In the past I wrote several articles about why it’s important to use Azure Storage for storing files in a Dynamics 365 Business Central project (avoiding blobs and media fields as much as possible). I also covered the differences between Azure Blob Storage and Azure File Share, and I provided a solution for mapping an Azure File Share as a local drive for data exchange with Dynamics 365 Business Central (link here).

Azure Files is specifically a file system in the cloud. Azure Files has all the file abstractions that you know and love from years of working with on-premises operating systems. Like Azure Blob storage, Azure Files offers a REST interface and REST-based client libraries. Unlike Azure Blob storage, Azure Files offers SMB access to Azure file shares. By using SMB, you can mount an Azure file share directly on Windows, Linux, or macOS, either on-premises or in cloud VMs, without writing any code or attaching any special drivers to the file system. You can also cache Azure file shares on on-premises file servers by using Azure File Sync for quick access, close to where the data is used.

I also wrote an article about best practices for handling an Azure File Share instance; you can read it here.

But now there’s some interesting news…

Starting from the Dynamics 365 Business Central 23.3 release, and thanks to a community contribution, a new native Azure File Share AL module is available.

This module was created with the same pattern as the Azure Blob Storage AL module and it lets you read and write files in an Azure File Share instance.

The module uses the common Interface “Storage Service Authorization” for authentication with the storage account. For authentication, you can use a shared key or a SAS token.
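As a minimal sketch of the two authentication options, the codeunit below builds the authorization either from a shared key or from a SAS token and hands it to the file client. The object ID, the codeunit and procedure names and the parameters are hypothetical. The sketch assumes that codeunit "Storage Service Authorization" exposes `CreateSharedKey` and `UseReadySAS` and that the client is configured through `Initialize`. Newer releases may expect `SecretText` instead of `Text` for the secret.

```al
codeunit 50100 "AFS Auth Sketch"
{
    // Hypothetical helper: initializes the client with either a SAS token or a shared key.
    procedure InitializeFileClient(var AFSFileClient: Codeunit "AFS File Client"; StorageAccountName: Text; FileShareName: Text; Secret: Text; UseSasToken: Boolean)
    var
        StorageServiceAuthorization: Codeunit "Storage Service Authorization";
        Authorization: Interface "Storage Service Authorization";
    begin
        if UseSasToken then
            // Assumption: Secret holds a ready-made SAS token
            Authorization := StorageServiceAuthorization.UseReadySAS(Secret)
        else
            // Assumption: Secret holds one of the storage account access keys
            Authorization := StorageServiceAuthorization.CreateSharedKey(Secret);

        // The second argument is the file share name, not the storage account name
        AFSFileClient.Initialize(StorageAccountName, FileShareName, Authorization);
    end;
}
```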

Then it adds some operational codeunits like codeunit “AFS File Client”, codeunit “AFS Operation Response” and codeunit “AFS Optional Parameters” and a table called “AFS Directory Content” for handling the files in the storage.

Using the new module is quite similar to using the Azure Blob Storage AL module.

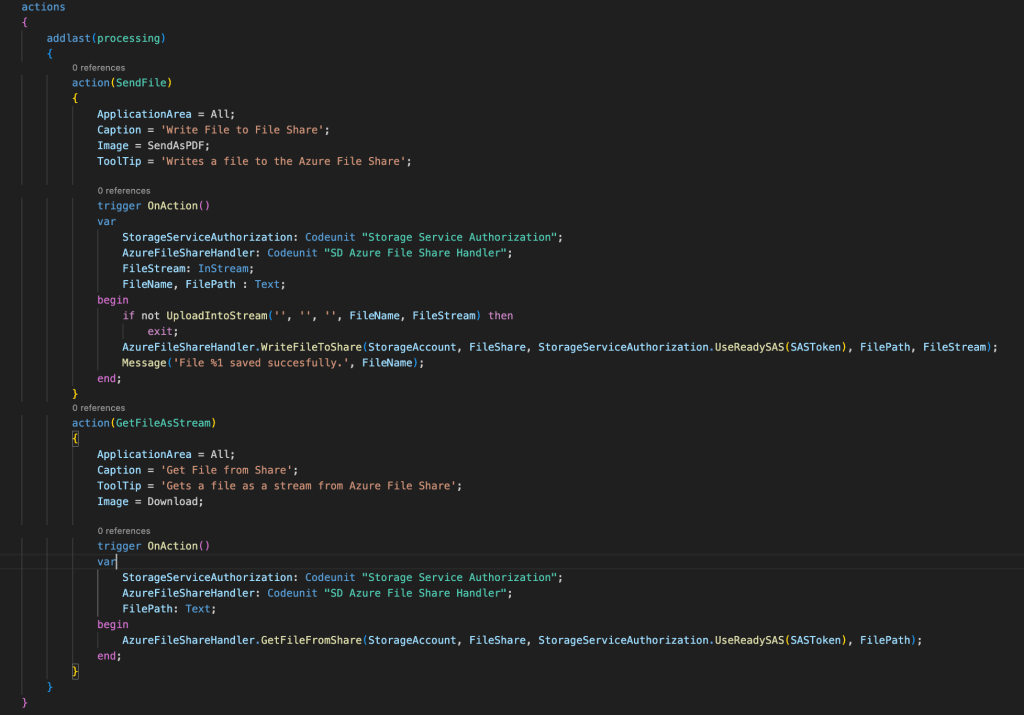

Here is an example of actions for writing and reading a file from the Azure File Share in AL code:

The “SD Azure File Share Handler” codeunit is a custom codeunit where I’ve defined the methods that use the new Azure File Share AL module.

Here is the method that writes a file to the Azure File Share:

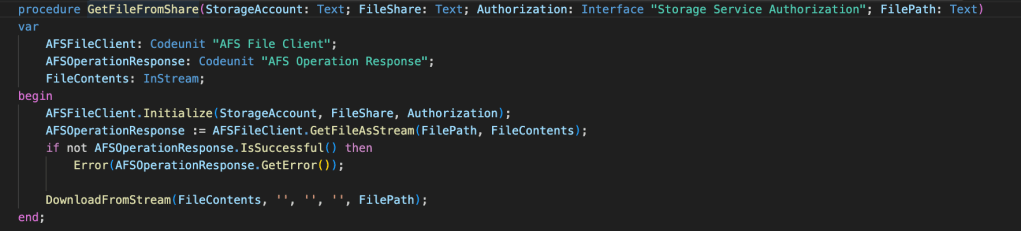
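In text form, a write along these lines might look like the sketch below. It is not the exact code from the screenshot: the object ID and names are hypothetical, the client is expected to be already initialized, and the sketch assumes that `CreateFile` accepts the file path plus an `InStream` and that `PutFileStream` then uploads the content.

```al
codeunit 50101 "AFS Write Sketch"
{
    // Hypothetical helper: creates a file on the share and uploads the stream content into it.
    procedure WriteFile(var AFSFileClient: Codeunit "AFS File Client"; FilePath: Text; var SourceInStream: InStream)
    var
        AFSOperationResponse: Codeunit "AFS Operation Response";
    begin
        // Step 1 (assumed signature): create the file entry on the share
        AFSOperationResponse := AFSFileClient.CreateFile(FilePath, SourceInStream);
        if not AFSOperationResponse.IsSuccessful() then
            Error(AFSOperationResponse.GetError());

        // Step 2 (assumed signature): upload the actual content
        AFSOperationResponse := AFSFileClient.PutFileStream(FilePath, SourceInStream);
        if not AFSOperationResponse.IsSuccessful() then
            Error(AFSOperationResponse.GetError());
    end;
}
```

Checking the response after each call makes it easier to see whether a failure (for example a 403 caused by missing write permission) comes from the create step or from the upload step.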

Here is the method that reads a file from the Azure File Share and downloads it to the client:
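A minimal sketch of such a read-and-download method follows. The object ID and names are hypothetical, the client is expected to be already initialized, and the sketch assumes that `GetFileAsStream` fills an `InStream` with the file content. `DownloadFromStream` is the standard AL function for sending a stream to the client.

```al
codeunit 50102 "AFS Read Sketch"
{
    // Hypothetical helper: reads a file from the share and offers it to the user as a download.
    procedure DownloadFile(var AFSFileClient: Codeunit "AFS File Client"; FilePath: Text; ToFileName: Text)
    var
        AFSOperationResponse: Codeunit "AFS Operation Response";
        FileInStream: InStream;
    begin
        // Assumed signature: the file content is returned through the InStream
        AFSOperationResponse := AFSFileClient.GetFileAsStream(FilePath, FileInStream);
        if not AFSOperationResponse.IsSuccessful() then
            Error(AFSOperationResponse.GetError());

        // Send the content to the client; ToFileName is the suggested file name
        DownloadFromStream(FileInStream, '', '', '', ToFileName);
    end;
}
```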

The codeunit “AFS File Client” contains all the methods for working with your Azure File Share instance.

As said before, an Azure File Share can be mounted as a drive on your local machine, which gives you a local drive in sync with the cloud, with full SMB protocol support. This means that you can create folders, subfolders and files on your local drive and these are synced online (and vice versa).

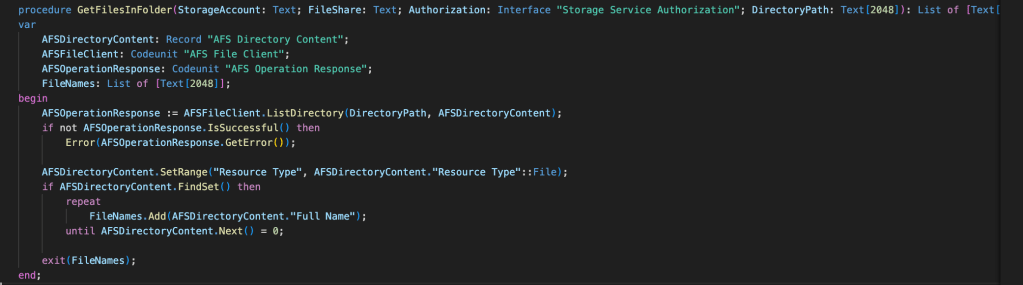

Here is an example of AL code that lists all files in an Azure File Share folder and loads them into a List object:
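A minimal sketch of such a listing follows. The object ID and names are hypothetical and the client is expected to be already initialized. The `ListDirectory` call, the “AFS Directory Content” table and its “Resource Type” and Name fields are used the same way as in the reader snippets in the comments below.

```al
codeunit 50103 "AFS List Sketch"
{
    // Hypothetical helper: collects the names of all files in a directory of the share.
    procedure ListFiles(var AFSFileClient: Codeunit "AFS File Client"; DirectoryPath: Text; var FileNames: List of [Text])
    var
        AFSDirectoryContent: Record "AFS Directory Content";
        AFSOperationResponse: Codeunit "AFS Operation Response";
        AFSOptionalParameters: Codeunit "AFS Optional Parameters";
    begin
        AFSOperationResponse := AFSFileClient.ListDirectory(DirectoryPath, AFSDirectoryContent, AFSOptionalParameters);
        if not AFSOperationResponse.IsSuccessful() then
            Error(AFSOperationResponse.GetError());

        // Keep only files and skip subdirectories
        AFSDirectoryContent.SetRange("Resource Type", AFSDirectoryContent."Resource Type"::File);
        if AFSDirectoryContent.FindSet() then
            repeat
                FileNames.Add(AFSDirectoryContent.Name);
            until AFSDirectoryContent.Next() = 0;
    end;
}
```

Note that, according to the comments below, a single call returns at most the first 5000 entries of a directory.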

This is absolutely a great addition that enriches the file support in SaaS. I suggest you start using it…

Hi Stefano, this is a great addition. I have tried all three functions but cannot get the create file to work. It returns a 403 error. Did you come across this when testing it?


Does the SAS token have write permission on the file share?


I am using Shared Key access instead of a SAS token.


I have the same issue with creating/writing the file. Tried both SAS and shared key. Was able to read and list files, but not save when using shared key. SAS basically didn’t work at all. Wondering if there was any progress on this one.


Had the same issue. Resolved by creating the SAS token using Azure Storage Explorer… Creating the token from within Azure leaves out the SR parameter.


Hi Stefano,

Thanks for your post. It is a great solution.

I could implement it, but I have an issue.

I am using ListDirectory

AFSOperationResponse := AFSFileClient.ListDirectory(DirectoryPath, AFSDirectoryContent, AFSOptionalParm);

There is a limitation: it returns only the first 5000 hits.

Somehow I need to get the NextMarker value back.

I think it is:

https://learn.microsoft.com/en-us/dotnet/api/microsoft.azure.storage.file.protocol.listfilesanddirectoriesresponse.nextmarker?view=azure-dotnet-legacy

and then I need to continue with the next 5000 files, then the next 5000, and so on.

I cannot get this value, and it seems that AFSOptionalParm doesn’t work either.

AFSOptionalParm: Codeunit "AFS Optional Parameters";

Do you have any idea how I should manage this to get all the files back?

We have more than 20,000 files in our folder.

thank you, Tamas


This is an interesting scenario. I think this is not handled at the moment in the AFS module. Can you post how you used the codeunit “AFS Optional Parameters”?


I think so, yes. You are right: I can see in the System extension that the code is there, but it is internal. So they haven’t implemented it yet.

AFS (Azure File Share): I added, for example, MaxResults through AFSOptionalParm, but it doesn’t work:

AFSFileClient.Initialize(RecLinksMappingSetup.StorageAccount, RecLinksMappingSetup.Container, StorageServiceAuthorization.CreateSharedKey(RecLinksMappingSetup.SharedKey));

AFSOptionalParm.MaxResults(1000);

AFSOperationResponse := AFSFileClient.ListDirectory(DirectoryPath, AFSDirectoryContent, AFSOptionalParm);

if AFSOperationResponse.IsSuccessful() then begin

AFSDirectoryContent.SetRange("Resource Type", AFSDirectoryContent."Resource Type"::File);

if AFSDirectoryContent.FindSet() then begin

and AFSResponse.GetNextMarker is not available.

AFS is better than ABS (Azure Blob Storage) because the user can map it as a drive.

There is no native solution for mapping ABS as a drive on a Microsoft system.

ABS:

With ABS I could set the filters (e.g. 20 files) and the response is able to return the next marker through GetNextMarker, so I know where I have to continue the loop to get more files from the folder:

Authorization := StorageServiceAuthorization.CreateSharedKey(RecLinksMappingSetup.SharedKey);

ABSBlobClient.Initialize(RecLinksMappingSetup.StorageAccount, RecLinksMappingSetup.Container, Authorization);

ABSOptParam.MaxResults(20);

Response := ABSBlobClient.ListBlobs(Content, ABSOptParam);

if Response.IsSuccessful() then begin

MarkerText := Response.GetNextMarker();

Thanks


I hope someone here can help me out.

I created an Azure file share. In this file share I created folders, and within the folders the files I want to read.

Using the GetFileAsText function of the codeunit “AFS File Client” works just fine.

But as soon as I use the function “ListDirectory” I get the error that the directory “ToBC” cannot be opened. But that is the actual folder name.

Anyone got any ideas what I am doing wrong?


Just ignore me… sometimes you just don’t see the most obvious. I mixed up the storage account name and the file share name 😉


I really need help though with the procedure CopyFile(SourceFileURI: Text; DestinationFilePath: Text) in the AFS File Client codeunit.

I successfully read one file from the file share, let’s say it is ToBc/test.txt.

After I’ve finished reading, I want to copy the file to a backup folder in the same file share: ToBc/Backup/test.txt

So I am calling the procedure like this: CopyFile('ToBc/test.txt', 'ToBc/Backup/test.txt')

and I always get the error: 400 The value for one of the HTTP headers is not in the correct format.

Does anyone know what I am doing wrong? I have been trying to get the CopyFile function to work for over an hour. The SAS token does have permission for this.

I’ve also tried leaving out the file name in the second parameter, but with no luck.

Here is the procedure, just in case someone wants to see it to be able to help:

local procedure AFSMoveFile2SubDirectory(var pAFSDirectoryContent: Record "AFS Directory Content"; pAzureFilesharePath: Record "WDG Azure Fileshare Path"): Boolean

begin

gcuAFSOperationResponse := gcuFileClient.CopyFile(pAFSDirectoryContent.URI, pAzureFilesharePath."Backup Path" + '/' + pAFSDirectoryContent.Name);

if gcuAFSOperationResponse.IsSuccessful() then begin

gcuAFSOperationResponse := gcuFileClient.DeleteFile(pAFSDirectoryContent.URI);

exit(gcuAFSOperationResponse.IsSuccessful());

end else begin

Error(gcuAFSOperationResponse.GetError());

exit(false);

end;

end;


Hello Daniel,

What do the values passed to gcuFileClient.CopyFile look like?

pAFSDirectoryContent.URI = ?

pAzureFilesharePath."Backup Path" + '/' + pAFSDirectoryContent.Name = ?

Thanks, Tamas


Hi Tamas,

As described above, CopyFile has two parameters, SourceFileURI and DestinationFilePath. Source: 'ToBc/test.txt' and destination: 'ToBc/Backup/test.txt'.

The same issue occurs with the RenameFile procedure; I can’t get that to work either.

So I implemented it with the CreateFile and PutFile functions.

But now my customer has requested a rename function. I don’t want to delete the file and create it again, so it sucks that copying and renaming don’t really seem to work.


OK, so I studied the API and finally got CopyFile to work. The SourceFileURI is the whole URI: https://<storageaccountname>.file.core.windows.net/<filesharename>/filepath?<SASToken>

I had tried the full URI before, but without appending the SAS token again.

IMO they should rewrite their functions 🤷

But at the same time I still couldn’t get the Rename function to work.

So I wrote my own little Rename function by calling the API myself.
