This post was born to flag a problem that I recently found at a customer, and I think that sharing it could help others.

Azure Functions support the Blob storage trigger, which lets you automatically start a function when a new or updated blob is detected in a blob container in a storage account.

When you create a blob-triggered Azure Function, you can choose among different types of bindings.

Stream binding:

```csharp
[Function(nameof(BlobStreamFunction))]
public async Task BlobStreamFunction(
    [BlobTrigger("blobcontainer/{name}")] Stream stream, string name)
{
    using var blobStreamReader = new StreamReader(stream);

    // Demo only: ReadToEndAsync buffers the whole blob content in memory.
    var content = await blobStreamReader.ReadToEndAsync();

    _logger.LogInformation("Blob name: {name} -- Blob content: {content}", name, content);
}
```

BlobClient binding:

```csharp
[Function(nameof(BlobClientFunction))]
public async Task BlobClientFunction(
    [BlobTrigger("blobcontainer/{name}")] BlobClient client, string name)
{
    // Demo only: DownloadContentAsync downloads the whole blob content into memory.
    var downloadResult = await client.DownloadContentAsync();
    var content = downloadResult.Value.Content.ToString();

    _logger.LogInformation("Blob name: {name} -- Blob content: {content}", name, content);
}
```

Byte array binding:

```csharp
[Function(nameof(BlobByteArrayFunction))]
public void BlobByteArrayFunction(
    [BlobTrigger("blobcontainer")] Byte[] data)
{
}
```

String binding:

```csharp
[Function(nameof(BlobStringFunction))]
public void BlobStringFunction(
    [BlobTrigger("blobcontainer")] string data)
{
}
```

The standard Visual Studio Azure Functions template for blob-triggered functions currently uses the Stream binding for both Azure Functions models (in-process and isolated).

But if you used the isolated model (now the recommended one) before March/April 2023 to create a blob-triggered Azure Function, the standard template defaulted to the string binding (and Stream binding was not supported).

What’s the problem with these binding types?

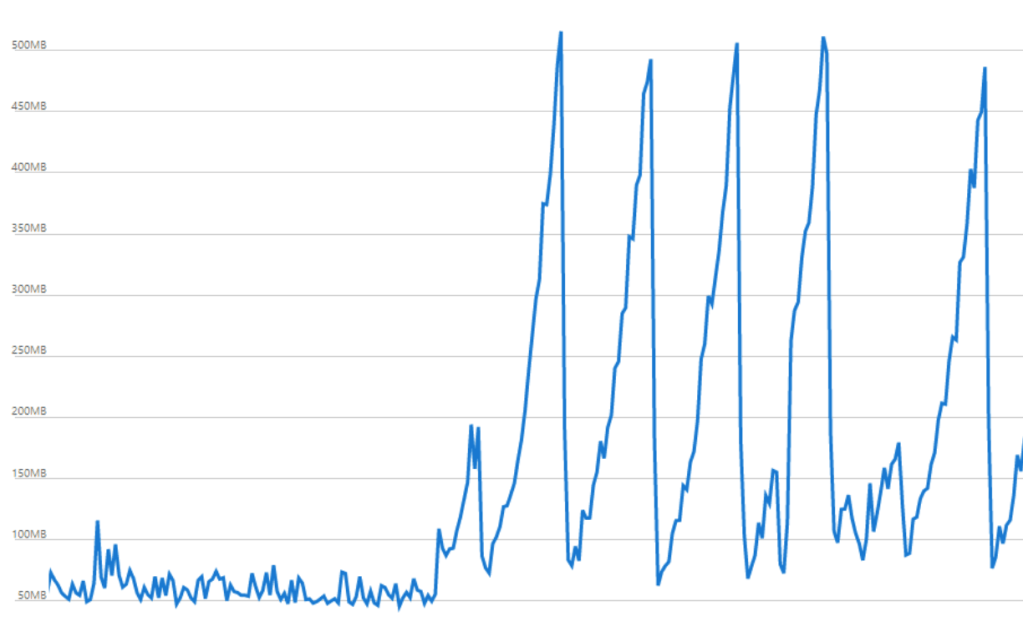

Here there’s a sneaky and hidden problem. When using the string binding (but also the Byte array binding) the content of the blob that triggers the function is fully loaded in memory, creating spikes on Azure Functions memory usage:

And this creates a lot of problem when you’ve lots of blobs to handle or when you’re handling very large blobs.

The blob trigger uses queues internally, and the maximum number of concurrent function invocations is 24. This limit applies separately to each function that uses a blob trigger.

The Consumption plan (used in this customer’s case) also limits a function app on one virtual machine (VM) to a maximum of 1.5 GB of memory (shared among all the functions within the Function App). Memory is used by each concurrently executing function instance and by the Functions runtime itself. If a blob-triggered function loads the entire blob into memory, the maximum memory used by that function just for blobs is 24 * maximum blob size.
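To put rough numbers on this, here is a back-of-the-envelope calculation. The 100 MB blob size is only an assumption for illustration (it is not the customer’s real figure), and I’m treating 1.5 GB as 1536 MB:

```latex
\[
\text{peak blob memory} \approx 24 \times \text{maximum blob size}
\]
\[
24 \times 100\ \text{MB} = 2400\ \text{MB} \approx 2.3\ \text{GB} > 1.5\ \text{GB}
\]
\[
\frac{1536\ \text{MB}}{24} = 64\ \text{MB}
\]
```

So, under these assumptions, 24 concurrent invocations on 100 MB blobs would already exceed the plan limit, and even 64 MB per blob is an optimistic ceiling, because the Functions runtime and any other functions in the app need their share of the same memory.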

What happened here?

When the customer started using large blob files, the Azure Function started using too much memory and became unreliable (lots of System.OutOfMemoryException errors when processing files).

This happened because, if the blob is large and/or other function invocations are concurrently processing other big files uploaded to that blob storage, the function exceeds the memory limits of the Functions runtime.

How to solve this?

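Simply by changing the binding type. Binding to string or Byte[] is only recommended when the blob is small. With a Stream or BlobClient binding, the blob is not loaded into memory up front and you can process it incrementally, provided your code reads it in chunks instead of calling something like ReadToEndAsync or DownloadContentAsync, which buffer the whole content.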
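As a minimal sketch of what “process it incrementally” can look like, the helper below reads a text blob line by line. The class and method names (`BlobStreamProcessing`, `CountLinesAsync`) are hypothetical and only for illustration, and it assumes the blob is line-oriented text; it is not the code used at the customer.

```csharp
using System.IO;
using System.Threading.Tasks;

public static class BlobStreamProcessing
{
    // Hypothetical helper (not from the original post): counts lines and
    // characters while holding only one line in memory at a time.
    public static async Task<(long Lines, long Chars)> CountLinesAsync(Stream stream)
    {
        using var reader = new StreamReader(stream);
        long lines = 0, chars = 0;

        while (true)
        {
            // Reads up to the next line break instead of the whole blob.
            var line = await reader.ReadLineAsync();
            if (line is null) break;

            lines++;
            chars += line.Length;
        }

        return (lines, chars);
    }
}
```

Inside a Stream-bound function you could call it as `var (lines, chars) = await BlobStreamProcessing.CountLinesAsync(stream);` in place of `ReadToEndAsync`. With a BlobClient binding, `await client.OpenReadAsync()` should give you a Stream that can be passed to the same helper. Keep in mind that a single very long line is still buffered entirely, so for binary or unstructured data it is probably safer to read fixed-size chunks with `Stream.ReadAsync`.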

Generally speaking, it’s always better to use a Stream or BlobClient type in a blob-triggered Azure Function (and fortunately Stream is now the default for every newly created function).

I also suggest connecting Application Insights to your Azure Function app and monitoring exceptions with the following KQL query:

```kusto
FunctionAppLogs
| where ExceptionDetails != ""
| where FunctionName == "<Your Function Name>"
| order by TimeGenerated desc
```

Please note that JavaScript and Java functions still load the entire blob into memory today, so keep this in mind if you’re using these languages to create blob-triggered Azure Functions.

There is more to say about Azure Functions Blob triggers, but that’s probably for a future post…

Thanks for sharing, Stefano. An important thing to consider.

My question is: won’t the following (Stream or BlobClient options) still load the blob into memory and cause a similar memory spike?

Stream:

var content = await blobStreamReader.ReadToEndAsync();

BlobClient:

var downloadResult = await client.DownloadContentAsync();

var content = downloadResult.Value.Content.ToString();

What is a good approach to tackle this instead of loading the whole blob into memory? Any suggestions?


Streams are memory-optimized. The best approach for me for memory, performance and throughput is using Event Grid triggers listening to events on the blob storage.
