AI features in Dynamics 365 Business Central keep growing with each release wave, and many interesting new features are planned for the upcoming waves too.

AI features in Dynamics 365 Business Central belong to the following two main categories:

- Microsoft-owned AI features: these features use a Microsoft-owned AI model in a Microsoft-owned Azure OpenAI instance and are SaaS-only.

- Partner/Customer-owned AI features: these are AI features created by partners or customers in AL using the Copilot Developer Toolkit. They use an AI model owned by the partner or customer, hosted on the partner’s or customer’s own Azure OpenAI instance.

Over the last few months I’ve been touring Italy and other European countries (and the tour continues), showing how to use these features in real-world projects, also from a different perspective than the traditional AL approach. Talking with a large number of customers and partners, I’ve collected some interesting and curious questions, and I’ve spent the last week working and brainstorming mainly on two of them:

- Is it possible to use a different AI platform with Dynamics 365 Business Central (neither OpenAI nor Azure OpenAI)?

- Is it possible to use AI models in a fully offline way if we have Dynamics 365 Business Central on-premise?

The answer to both questions is YES! You can…

But what intrigued me was the last question: Is it possible to use AI models in a fully offline way if we have Dynamics 365 Business Central on-premise?

Talking more in depth with the customer who raised it, I learned that they want to use an AI model fully offline, without sending requests to an online AI service such as Azure OpenAI, OpenAI or others. The prompts, the AI model and the AI responses must all stay offline.

Large language models (LLMs) have created exciting new opportunities to be more productive and creative using AI, but their size means they can require significant computing resources to operate and this is the reason why these LLMs are provided as SaaS services (like Azure OpenAI).

While those models will still be the gold standard for solving many types of complex tasks, Microsoft has recently been developing a series of small language models (SLMs) that offer many of the same capabilities found in LLMs but are smaller in size and are trained on smaller amounts of data.

Small language models are designed to perform well for simpler tasks, are more accessible and easier to use for organizations with limited resources and they can be more easily fine-tuned to meet specific needs.

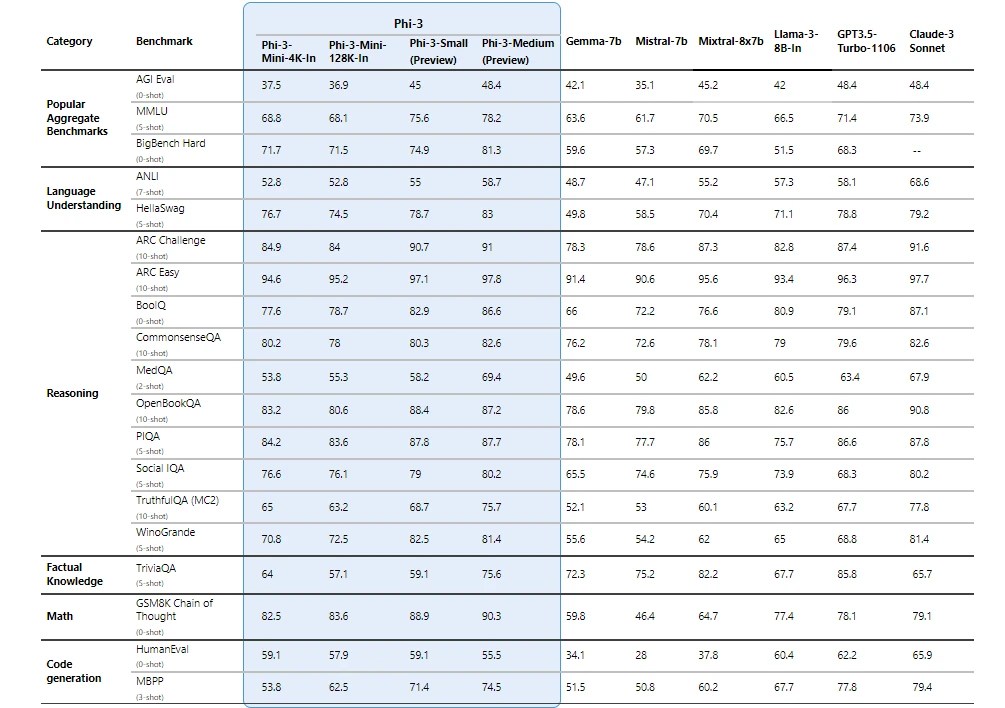

At the end of April, Microsoft announced Phi-3, a family of open AI models. According to Microsoft, Phi-3 models are the most capable and cost-effective small language models (SLMs) available, outperforming models of the same size and the next size up across a variety of language, reasoning, coding, and math benchmarks.

In particular, Phi-3 Mini is a lightweight AI model developed by Microsoft as part of its small language model (SLM) family. It is the first of three SLMs that Microsoft plans to launch in the near future, the other two being Phi-3 Small and Phi-3 Medium. Phi-3 Mini has 3.8 billion parameters and has been trained on a smaller dataset than large language models such as GPT-4. It is designed for simpler tasks, which makes it more accessible and affordable for businesses with limited resources.

The Phi-3 model family is the following:

- Phi-3-mini (3.8 Billion Parameters): this is the smallest and most versatile model, well suited for deployment on devices with limited resources or for cost-sensitive applications. It comes in two variants: 4K Context Length (ideal for tasks requiring shorter text inputs and faster response times) and 128K Context Length (which has a significantly longer context window, enabling it to handle and reason over larger prompts).

- Phi-3-small (7 Billion Parameters): scheduled for a future release, it offers a balance between performance and resource efficiency.

- Phi-3-medium (14 Billion Parameters): it will be the most powerful model, targeting tasks requiring the highest level of performance.
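To give a feeling for what these parameter counts mean in practice, here is a rough back-of-the-envelope sketch (not a Microsoft figure). It only estimates the memory needed to hold the weights, using parameters × bits per weight / 8, and ignores the context cache and runtime overhead. The 16-bit and 4-bit precisions are assumptions chosen for illustration, and the function name is hypothetical.

```python
def weight_memory_gb(parameters: int, bits_per_weight: int) -> float:
    """Approximate memory for the model weights only, in GB (10^9 bytes)."""
    return parameters * bits_per_weight / 8 / 1e9


# Parameter counts as listed above.
PHI3_FAMILY = {
    "phi-3-mini": 3_800_000_000,
    "phi-3-small": 7_000_000_000,
    "phi-3-medium": 14_000_000_000,
}

if __name__ == "__main__":
    for name, params in PHI3_FAMILY.items():
        fp16 = weight_memory_gb(params, 16)  # assumption: 16-bit weights
        q4 = weight_memory_gb(params, 4)     # assumption: 4-bit quantized weights
        print(f"{name}: ~{fp16:.1f} GB at 16-bit, ~{q4:.1f} GB at 4-bit")
```

Under these assumptions, the weights of the 3.8-billion-parameter model need roughly 7.6 GB at 16-bit and roughly 1.9 GB at 4-bit, which is why the mini model is a realistic candidate for a local server or even a laptop.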

Phi-3 models significantly outperform language models of the same and larger sizes on key benchmarks (see Microsoft’s benchmark numbers below, where higher is better). Phi-3 Mini does better than models twice its size, and Phi-3-small and Phi-3-medium outperform much larger models, including GPT-3.5T:

Why am I talking about these small language models (SLMs) in this post? Mainly because:

- They’re small in size and don’t require extreme resources.

- For many tasks, they are as capable as traditional LLMs.

- They can work offline or on device.

- You can have generative-AI features without cost.

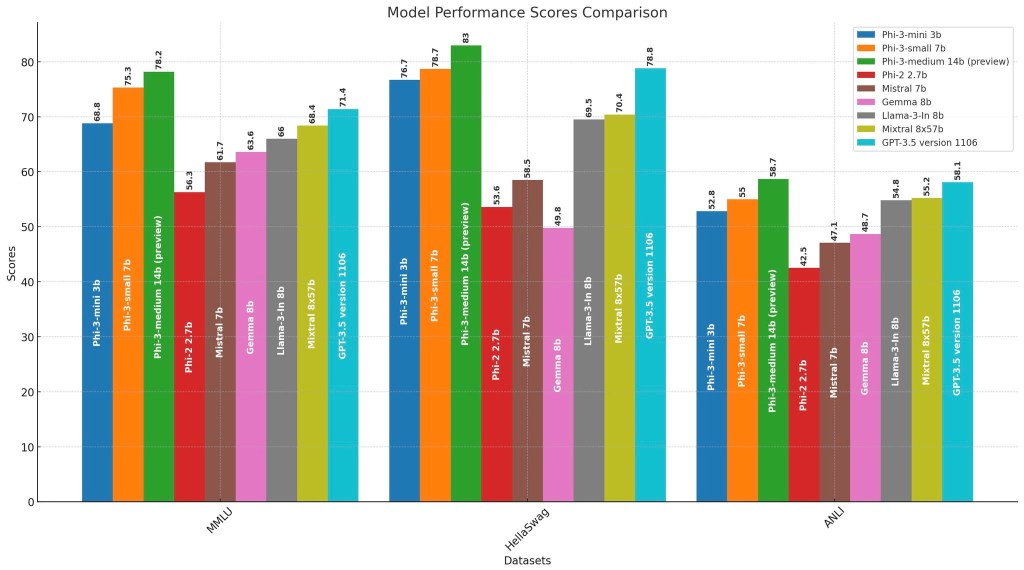

Phi-3-Mini performs as well as some models that are 100 times its size, as you can also see from the following tests (not coming from Microsoft) that show how these models perform on some common AI datasets:

This model does not require heavy compute hardware such as a GPU and can run on practically any device. The cherry on the cake is that it is free and open-source for academic and commercial use!

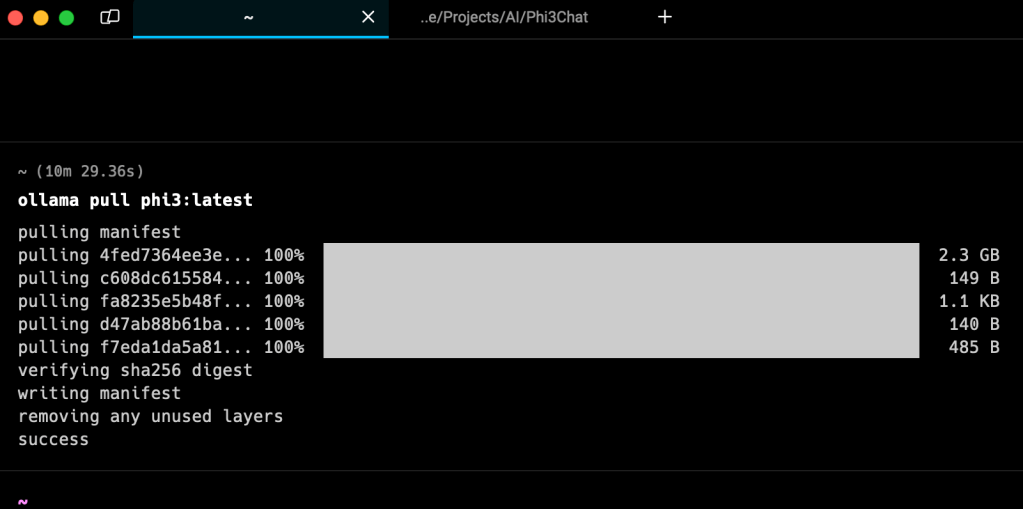

So I had an idea: why not integrate Dynamics 365 Business Central on-premise with an SLM like Phi-3 running offline through Ollama?

Ollama is a tool that allows you to run SLMs and LLMs on your own local machine and on different platforms (Windows, macOS, Linux). This is perfect for running my SLM locally…
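As a minimal sketch of what talking to a local model looks like, the Python snippet below sends a prompt to Ollama's local REST endpoint. It is only an illustration, not the solution described in this post. It assumes Ollama is running on its default port (11434) and that the model has been pulled under the tag `phi3`; the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "phi3") -> bytes:
    """Build the JSON body for a non-streaming generate call."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(payload).encode("utf-8")


def extract_text(raw_body: bytes) -> str:
    """Pull the generated text out of Ollama's JSON reply."""
    return json.loads(raw_body.decode("utf-8"))["response"].strip()


def ask_local_model(prompt: str, model: str = "phi3",
                    url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send the prompt to the local server; nothing leaves the machine."""
    request = urllib.request.Request(
        url,
        data=build_request(prompt, model),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_text(response.read())
```

With the server running, `ask_local_model("Describe an ERP item category in one sentence.")` would return the model's answer as plain text.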

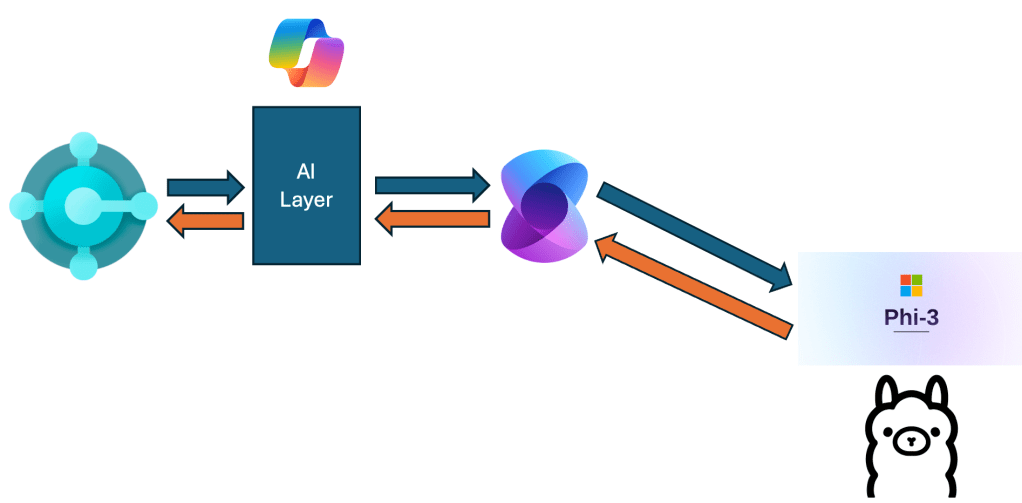

I’ve then spent some hours creating the following solution:

In this solution, the Dynamics 365 Business Central Copilot talks to the latest Phi-3-mini model, deployed through Ollama on my own local server:

To orchestrate the AI calls to the Phi-3 AI model, I’m using the AI tool that I love the most for many real-world scenarios: Semantic Kernel.

If you attended my AI session at BCTechdays in Belgium last year, you may remember that I talked a bit about a promising preview tool called Semantic Kernel. Since then, this Microsoft AI orchestration tool has grown a lot, and I personally use it in many big real-world AI projects for several reasons that I will discuss at the next events on my schedule, together with the technical details of this solution.

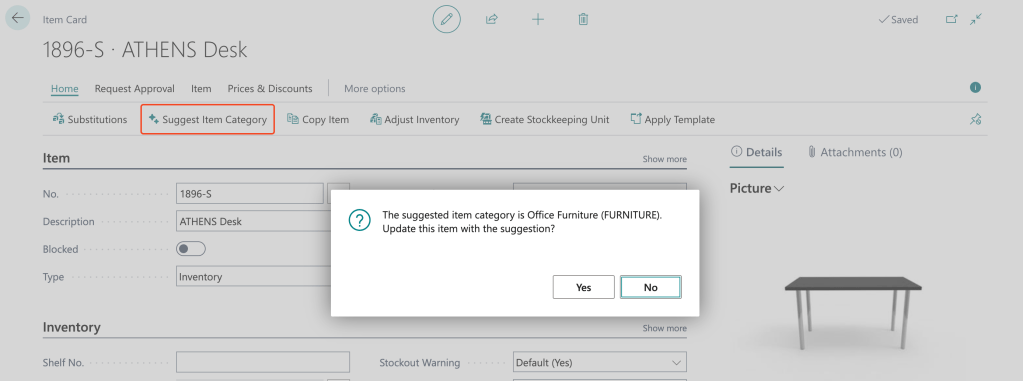

As the final result of this game, I now have a Dynamics 365 Business Central Copilot working fully offline with a small language model like Phi-3-mini (here it is in action with a Copilot feature that suggests an Item Category for an item according to the Item Categories available in Dynamics 365 Business Central):

I think it’s absolutely cool…
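The item category suggestion can be illustrated with a small Python sketch. This is not the actual implementation (which uses AL and Semantic Kernel, and whose details I will show at the events); the prompt wording, the function names and the sample category codes are all assumptions. The idea is to hand the model the list of available categories and then accept its answer only if it matches one of them, so a hallucinated category never reaches the item card.

```python
from typing import Optional


def build_category_prompt(item_description: str, categories: list[str]) -> str:
    """Build a prompt that restricts the model to the known categories."""
    options = "\n".join(f"- {category}" for category in categories)
    return (
        "You classify items for an ERP system.\n"
        "Choose the single best category for the item from this list:\n"
        f"{options}\n"
        f"Item: {item_description}\n"
        "Answer with the category code only."
    )


def match_category(model_answer: str, categories: list[str]) -> Optional[str]:
    """Return the matching category, or None if the answer is not in the list."""
    # Drop whitespace first, then stray quotes and a trailing period.
    cleaned = model_answer.strip().strip(".\"'").casefold()
    for category in categories:
        if category.casefold() == cleaned:
            return category
    return None
```

For example, with the hypothetical categories `["CHAIR", "DESK", "TABLE"]`, an answer like `" desk."` is accepted as `DESK`, while an answer such as `SOFA` is rejected and the user gets no suggestion.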

Obviously, choosing the right language model depends on an organization’s specific needs, the complexity of the task and the available resources. Small language models are well suited for organizations looking to build applications that can run locally on a device or offline, where a task doesn’t require extensive reasoning, or where a quick response is needed.

Phi-3 is absolutely a great SLM, with the power of traditional GPT models for many tasks.

If you attend one of the “AI Tour” days on my schedule in the next weeks or months, you will also see this solution in detail. Maybe it can fit some of your customers’ needs too…