In a previous post I wrote about how you can monitor your Azure OpenAI usage with Azure Monitor and Application Insights. Monitoring Azure OpenAI usage is an important practice to adopt, and if you plan to start using generative AI features with Dynamics 365 Business Central, please activate AI monitoring as well.

In this post I want to talk about another interesting (and very easy to set up) tool for monitoring Azure OpenAI usage. Microsoft has recently released on GitHub a template for an Azure Workbook that you can install in your subscription and start using to monitor Azure OpenAI. You can download the workbook from here.

Installing the workbook in your Azure subscription is very easy. From the Azure Portal, select Azure Workbooks, then click on the Create button and select New.

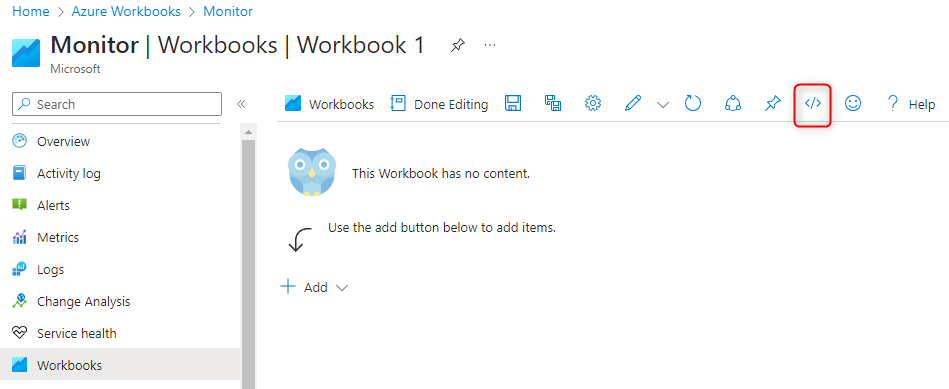

In the workbook creation page, open the Advanced Editor using the </> button on the toolbar.

Here, paste the content of the workbook template you downloaded and click Apply. Then save the newly created Azure Workbook (select the subscription, resource group and location). Now you’re ready to go!

The workbook has 3 main panels.

The Overview panel provides a high-level overview of your Azure OpenAI resources. It uses KQL to query Azure Resource Graph and gives a holistic view of the OpenAI resources across subscriptions, resource groups, network access patterns (open or isolated) and regions. You can see all your subscriptions and apply filters for subscription, Azure OpenAI service instance and time range.

You can also see the details of all the Azure OpenAI instances for each subscription:

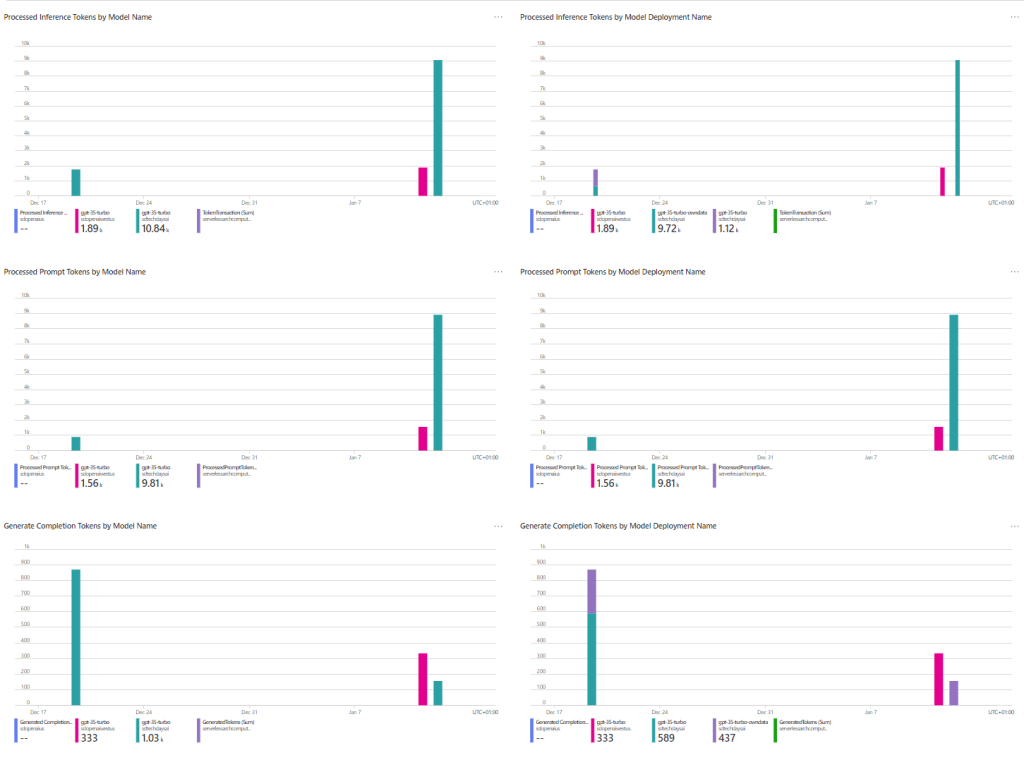
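To give an idea of the kind of Resource Graph query that sits behind an overview like this, here is a minimal sketch. It is not the query used by the workbook template. It assumes that Azure OpenAI resources show up in the `resources` table with type `microsoft.cognitiveservices/accounts` and kind `OpenAI`, and that the network access setting is available as `properties.publicNetworkAccess`. The `build_overview_query` helper is hypothetical: it only builds the KQL text, which you could then paste into Azure Resource Graph Explorer.

```python
from typing import Optional, Sequence


def build_overview_query(subscription_ids: Optional[Sequence[str]] = None) -> str:
    """Build a Resource Graph (KQL) query listing Azure OpenAI resources.

    Hypothetical helper for illustration only; the resource type, kind and
    property names are assumptions, not taken from the workbook template.
    """
    lines = [
        "resources",
        "| where type =~ 'microsoft.cognitiveservices/accounts'",
        "| where kind =~ 'OpenAI'",
    ]
    # Optionally restrict the result to the selected subscriptions.
    if subscription_ids:
        quoted = ", ".join(f"'{s}'" for s in subscription_ids)
        lines.append(f"| where subscriptionId in ({quoted})")
    lines.append(
        "| project name, subscriptionId, resourceGroup, location, "
        "publicNetworkAccess = tostring(properties.publicNetworkAccess)"
    )
    return "\n".join(lines)


if __name__ == "__main__":
    # Placeholder subscription id, replace it with one of your own.
    print(build_overview_query(["00000000-0000-0000-0000-000000000000"]))
```

Building the query as text keeps the sketch independent of any SDK, so you can test the filter logic before running it against your tenant.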

The Monitor panel gives you a comprehensive view of all metrics across multiple subscriptions and resources. It includes the following information:

- HTTP requests – by multiple dimensions: model name & version, status code, model deployment name, operation and API name, and region.

- Token-based usage – multiple metrics: Processed Inference Tokens, Processed Prompt Tokens, Generated Completions Tokens, Active Tokens; these are displayed with a couple of dimensions, such as model name and model deployment name.

- PTU Utilization – by multiple dimensions: model name & version, streaming type and model deployment name.

- Fine-tuning – it shows the ‘Processed FineTuned Training Hours’ metric by two dimensions: model name and model deployment name.

The HTTP Request panel is extremely useful to monitor how your users are using the various models and how requests to the Azure OpenAI models are distributed during the day (in the context of Dynamics 365 Business Central this is useful to check if you need load balancing).
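If you export the request timestamps, the same "when is the peak?" check can be sketched in a few lines. This is only an illustration under stated assumptions: the timestamps are sample data invented for the example, and `requests_per_hour` and `peak_hour` are hypothetical helpers, not part of the workbook.

```python
from collections import Counter
from datetime import datetime
from typing import Iterable, Tuple


def requests_per_hour(timestamps: Iterable[datetime]) -> Counter:
    """Count requests per hour of the day (0-23)."""
    return Counter(ts.hour for ts in timestamps)


def peak_hour(timestamps: Iterable[datetime]) -> Tuple[int, int]:
    """Return (hour, request count) for the busiest hour.

    When several hours share the same count, the earliest hour is returned.
    """
    counts = requests_per_hour(timestamps)
    if not counts:
        raise ValueError("no timestamps supplied")
    return max(counts.items(), key=lambda kv: (kv[1], -kv[0]))


if __name__ == "__main__":
    # Invented sample data: two requests at 9 and one at 14.
    sample = [
        datetime(2024, 5, 6, 9, 5),
        datetime(2024, 5, 6, 9, 30),
        datetime(2024, 5, 6, 14, 0),
    ]
    print(peak_hour(sample))
```

A strongly concentrated peak is a hint that spreading requests over more than one deployment is worth considering.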

The Token-Based Usage panel gives you details on the following metrics:

- Active tokens: Total tokens minus cached tokens over a period of time. Use this metric to understand your TPS- or TPM-based utilization for PTUs and compare it to your benchmarks for target TPS or TPM for your scenarios.

- Processed Inference Tokens: Number of inference tokens processed on an OpenAI model. Calculated as prompt tokens (input) plus generated tokens (output).

- Processed Prompt Tokens: Number of prompt tokens processed (input) on an OpenAI model.

- Generated Completions Tokens: Number of tokens generated (output) from an OpenAI model.

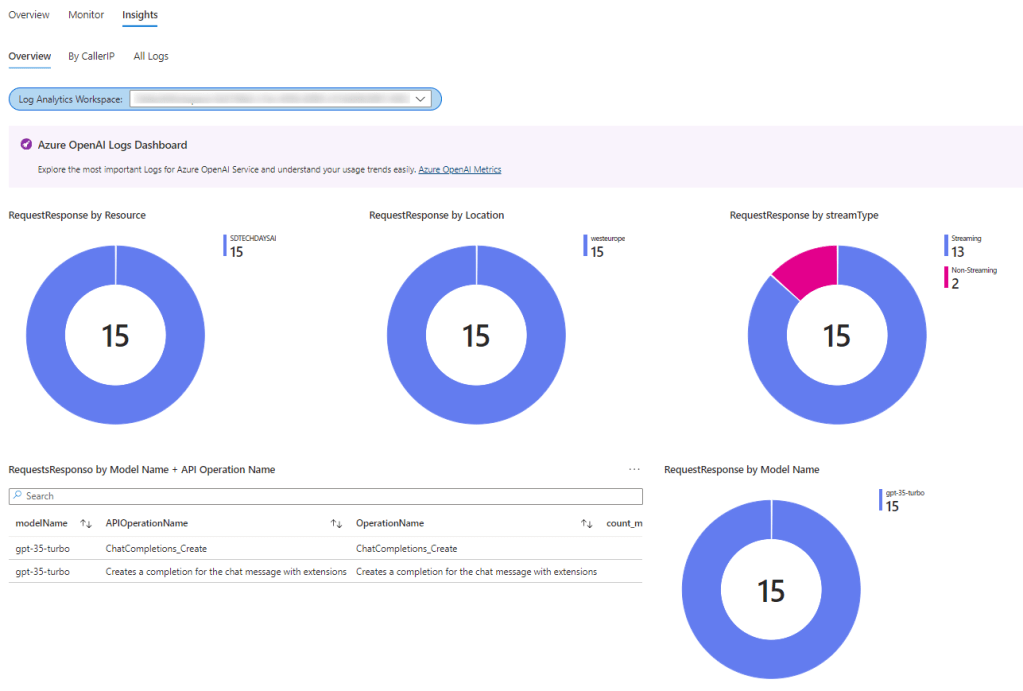
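The relationship between these metrics can be made concrete with a small worked example. The sketch below assumes that the "total tokens" in the Active tokens definition equals the processed inference tokens (prompt plus generated). That is my reading of the definitions above, not something the workbook states. The `TokenSample` class and its field names are hypothetical and the numbers are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenSample:
    """Token counts for one time window (illustrative names, not API fields)."""

    prompt_tokens: int      # input tokens
    generated_tokens: int   # output tokens
    cached_tokens: int = 0  # tokens served from cache

    @property
    def processed_inference_tokens(self) -> int:
        # Prompt tokens (input) plus generated tokens (output).
        return self.prompt_tokens + self.generated_tokens

    @property
    def active_tokens(self) -> int:
        # Assumption: total tokens == processed inference tokens.
        return self.processed_inference_tokens - self.cached_tokens


def tokens_per_minute(active_tokens: int, window_seconds: float) -> float:
    """Convert an active-token count over a window into a TPM rate."""
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    return active_tokens * 60.0 / window_seconds


if __name__ == "__main__":
    sample = TokenSample(prompt_tokens=12_000, generated_tokens=3_000, cached_tokens=2_000)
    print(sample.processed_inference_tokens)                         # 15000
    print(sample.active_tokens)                                      # 13000
    print(tokens_per_minute(sample.active_tokens, window_seconds=300))  # 2600.0
```

Comparing the resulting TPM figure with your target TPM tells you how close a deployment is to the benchmark you set for your scenario.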

The Insights panel gives you interesting information coming from logs. In order to use it you need to enable Diagnostic Settings for your Azure OpenAI instance (see here).

When you select the Log Analytics Workspace where your telemetry is sent, this panel shows an aggregated view of all logs, by multiple dimensions. It includes the following information:

- Model name

- Model Deployment name

- Average Duration (in milliseconds)

- API operation name

The By Caller IP tab breaks down the logs by caller IP, which is useful to better understand usage patterns and traffic origins. It includes the following information:

- Request/Response (Model name, Model deployment name & Operation name)

- Average Duration
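The aggregation these tabs perform (average duration grouped by one or more dimensions) can be sketched as follows. This is a minimal illustration on in-memory records, not the KQL used by the workbook. The record keys (`caller_ip`, `model`, `operation`, `duration_ms`) and all values are hypothetical, so map them to whatever your exported logs actually contain.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping, Sequence, Tuple


def average_duration_by(
    records: Iterable[Mapping[str, object]],
    keys: Sequence[str],
) -> Dict[Tuple[str, ...], float]:
    """Average the 'duration_ms' field of log records grouped by `keys`."""
    totals = defaultdict(lambda: [0.0, 0])  # group -> [sum of durations, count]
    for record in records:
        group = tuple(str(record[k]) for k in keys)
        totals[group][0] += float(record["duration_ms"])
        totals[group][1] += 1
    return {group: total / count for group, (total, count) in totals.items()}


if __name__ == "__main__":
    # Invented sample records with hypothetical field names.
    logs = [
        {"caller_ip": "10.0.0.1", "model": "model-a", "operation": "chat", "duration_ms": 800},
        {"caller_ip": "10.0.0.1", "model": "model-a", "operation": "chat", "duration_ms": 1200},
        {"caller_ip": "10.0.0.2", "model": "model-b", "operation": "embeddings", "duration_ms": 500},
    ]
    for group, avg in average_duration_by(logs, ["caller_ip"]).items():
        print(group, avg)
```

Passing `["model", "operation"]` instead of `["caller_ip"]` gives the grouping used by the first Insights view.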

The All Logs panel provides a complete view of all logs originating from multiple subscriptions, resource groups and OpenAI resources.

Why should you adopt a monitoring practice for Azure OpenAI services?

If you’re starting your Azure OpenAI journey by providing solutions that use generative AI features (in Dynamics 365 Business Central and more), you should start using these monitoring features for at least the following reasons:

- Control your resource allocation (vital for fair resource distribution and for responsiveness of the models).

- Keep track of each tenant’s service consumption for accurate billing and service verification, and charge customers in proportion to their relative consumption.

- Check usage patterns across working hours and, if needed, set up per-customer usage limits.

Now you have the tools…
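As a rough illustration of the billing and usage-limit points, the sketch below splits consumption between tenants and flags those above a limit. It is only a sketch under stated assumptions: the tenant names, token counts and limit are invented, and `consumption_shares` and `tenants_over_limit` are hypothetical helpers that you would feed with per-tenant totals taken from your own telemetry.

```python
from typing import Dict, List


def consumption_shares(tokens_by_tenant: Dict[str, int]) -> Dict[str, float]:
    """Return each tenant's fraction of the total token consumption."""
    total = sum(tokens_by_tenant.values())
    if total == 0:
        return {tenant: 0.0 for tenant in tokens_by_tenant}
    return {tenant: used / total for tenant, used in tokens_by_tenant.items()}


def tenants_over_limit(tokens_by_tenant: Dict[str, int], limit: int) -> List[str]:
    """Return the tenants whose consumption exceeds `limit`, sorted by name."""
    return sorted(t for t, used in tokens_by_tenant.items() if used > limit)


if __name__ == "__main__":
    # Invented monthly token totals per tenant.
    usage = {"contoso": 60_000, "fabrikam": 30_000, "adatum": 10_000}
    for tenant, share in consumption_shares(usage).items():
        print(f"{tenant}: {share:.0%}")
    print(tenants_over_limit(usage, limit=50_000))
```

Multiplying each share by the total Azure OpenAI cost for the period gives a simple proportional charge per customer.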